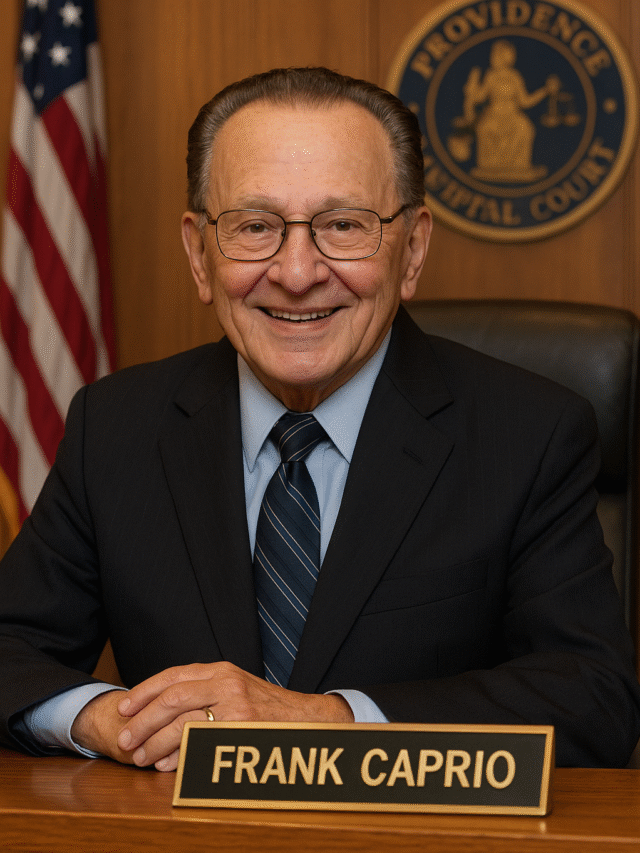

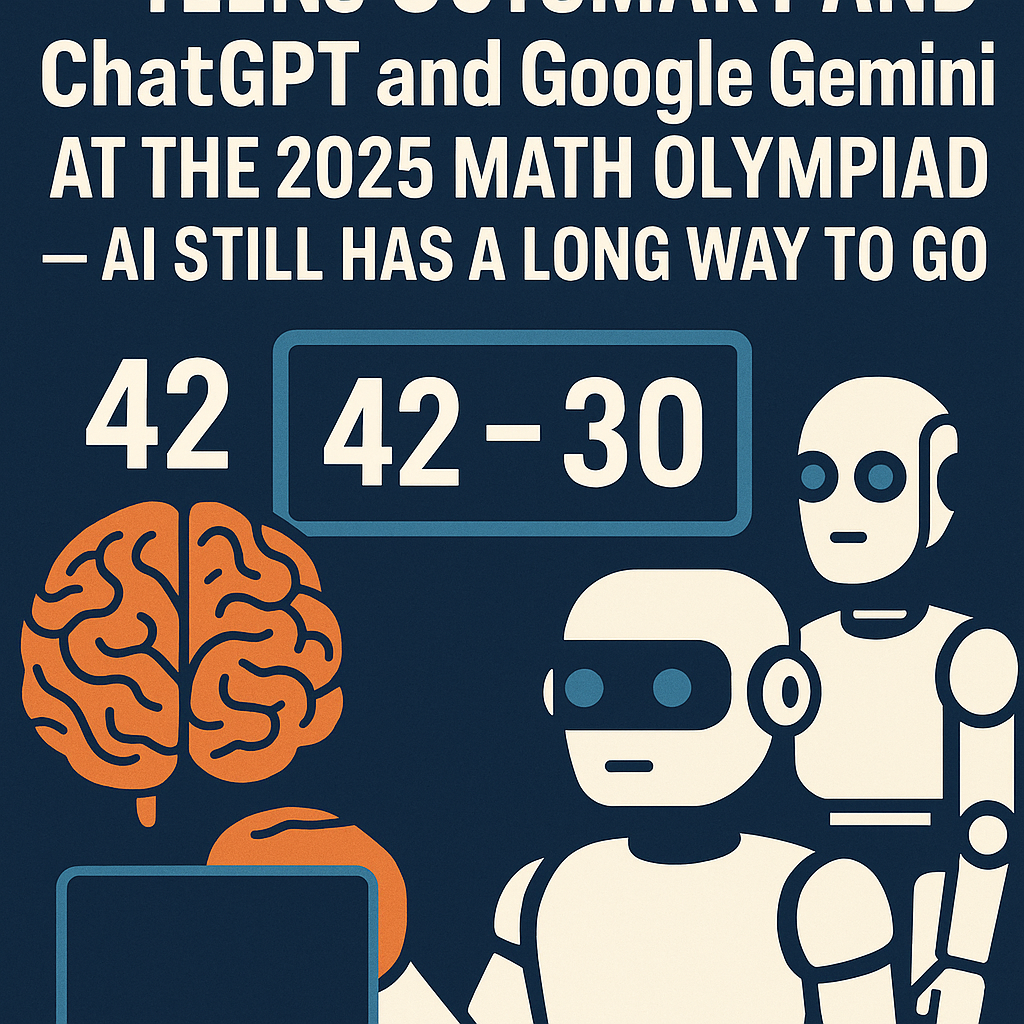

In a surprising twist at the 2025 International Mathematical Olympiad (IMO), five brilliant teenagers achieved perfect scores of 42/42, outperforming world-renowned AI models such as ChatGPT and Google Gemini. This result has sparked global conversations about the actual capabilities and limits of artificial intelligence in problem-solving, especially in the field of mathematics education.

What is the International Math Olympiad?

The International Mathematical Olympiad (IMO) is the world’s most prestigious mathematics competition for high school students. It challenges participants with six extremely difficult problems, each worth seven points for a maximum possible score of 42, that test creativity, problem-solving ability, and deep mathematical knowledge. The competition is often seen as a benchmark for the highest level of pre-university mathematical talent.

In 2025, the Olympiad was hosted in Queensland, Australia, drawing top talent from over 100 countries.

AI Joins the Competition

This year, something new happened: AI models like ChatGPT and Google Gemini were unofficially challenged with the same problems as the student participants. The goal? To evaluate how well modern artificial intelligence systems perform when faced with creative, logic-intensive problems designed for the world's brightest young minds.

- Google Gemini: Scored 35 out of 42

- ChatGPT: Scored 30 out of 42

- Top Human Students: Five students scored a perfect 42

This comparison was not just for fun—it aimed to highlight where AI truly stands when it comes to deep reasoning, creativity, and abstract thinking.

Why Did AI Fall Short?

Despite being trained on billions of documents and mathematical problems, both Gemini and ChatGPT struggled to solve some of the most complex questions. Here's why:

1. Lack of True Understanding

AI models operate on patterns and probability, not actual understanding. While they can simulate problem-solving processes, they lack the depth of comprehension and creativity that human students bring to the table.

2. Difficulty With Multi-Step Logical Reasoning

Many Olympiad problems require multi-step logic chains, abstract generalizations, and intuition—areas where AI models often falter. These problems go beyond memorization and require innovative thinking that AI hasn't mastered yet.

3. Inability to ‘Think Outside the Box’

While AI can mimic solutions based on its training data, it lacks the ability to devise truly original solutions. Human students often invent new approaches mid-solution, an area where AI is still limited.

What This Means for the Future of AI in Education

This event underscores that while AI is powerful, it’s not a replacement for human intelligence—at least not yet. The Math Olympiad result offers a reality check for the growing belief that AI can outperform humans in all areas.

AI as a Support Tool

Rather than replacing students or educators, AI should be viewed as an assistive technology. It can explain concepts, offer extra practice, or help visualize problems—but true learning still requires human curiosity and guidance.

The Human Element in Learning

Education is more than just getting correct answers. It involves exploration, failure, perseverance, and sometimes “eureka” moments that AI cannot replicate. This is especially true in mathematics, where insight often comes from lived experience and long-term practice.

Reactions from the Tech and Education Communities

The outcome of the Olympiad has sparked interesting reactions worldwide.

“AI is impressive, but this shows it's still no match for the human mind when it comes to originality and logical creativity,” said Dr. Samantha Lim, an AI researcher at MIT.

“This isn’t a loss for AI—it’s a win for education. Our youth are still ahead, and that’s reassuring,” commented Arvind Mahajan, an Olympiad coach from India.

Even Elon Musk reacted on X (formerly Twitter): “AI isn’t magic—it’s math. These kids proved that raw intelligence and creativity still beat the best machines.”

Global Recognition for Student Talent

The five perfect scorers from the 2025 Olympiad are being hailed as international icons of youth brilliance. Among them were students from the U.S., India, China, South Korea, and Romania. Their achievement demonstrates that raw human brainpower continues to lead in fields requiring depth and creativity.

Is AI Learning from This?

Interestingly, these results are likely to be fed back into the AI systems to help them learn and evolve. AI companies like OpenAI and Google are constantly fine-tuning their models, and lessons from these shortcomings will likely be addressed in future versions.

However, true creativity and intuition may never be programmable. And that’s not a weakness—it’s what makes humans beautifully unpredictable.

Final Thoughts: Collaboration, Not Competition

This Olympiad wasn’t just about who scored higher; it was about showing the different strengths of humans and machines. AI has incredible speed, recall, and efficiency. But when it comes to real-world creativity and problem-solving, our brains are still the gold standard.

Instead of racing against AI, the future lies in using it as a collaborator. The perfect learning environment could be one where human intelligence is supported, not replaced, by artificial intelligence.

In the end, these teens didn’t just win a math competition—they reminded the world that human brilliance still shines brightest.

